AI research company OpenAI released ChatGPT as a free research preview on Nov. 30, 2022. What started off as a tool for generating human-like text and engaging in conversational dialogue quickly became a behemoth of 400 million weekly users worldwide, with hopes of hitting 1 billion users by the end of 2025.

Unsurprisingly, teens are among them. A Pew Research Center survey found that 26% of U.S. teens ages 13 to 17 have used ChatGPT for schoolwork, double the share in 2023. As AI becomes more integrated into classrooms, disagreements over the best way to navigate it are growing as well.

Personally, I believe AI has no place in academics, at least not in the way it’s being used now. Still, the reality is that AI is a tool we are going to have to live with for the rest of our lives. We can’t revert to our pre-AI days, and we can’t completely shun it either. While it isn’t necessary to learn how to use AI (in fact, another Pew Research Center study found that 66% of U.S. adults have never used an AI chatbot), learning to do so means being at the forefront of the future.

In an era of deepfakes and misinformation, it’s incredibly important that we learn to spot when AI is being used unethically, which can be more complicated than it seems. AI is one of those tricky subjects where responsible use can range from avoiding it completely to using it only to assist you with tasks.

Like most students at Central, I’ve used AI before, whether it be for brainstorming research questions or finding a word on the tip of my tongue. I was enthusiastic about ChatGPT’s potential for helping me with schoolwork when it came out my freshman year, and I had no qualms about using it. The way I saw it, if I didn’t use it to write my English themes, then I was using it ethically. However, my mindset has radically transformed since then.

I found that the more I used AI, the more difficult it was for me to accomplish simple tasks like summarizing an article or coming up with my own research question for a history essay. This lack of originality has been found in many other AI users: research from Carnegie Mellon University and Microsoft suggests that the more people use AI, the less critical thinking they do.

This isn’t to say AI is entirely without its uses. ChatGPT is horrible at physics, but I’ve found that Gemini is quite good at explaining basic concepts. AI can function as a personal tutor for students who don’t have access to a human tutor. Although Central has after-school tutoring, additional help from AI doesn’t hurt.

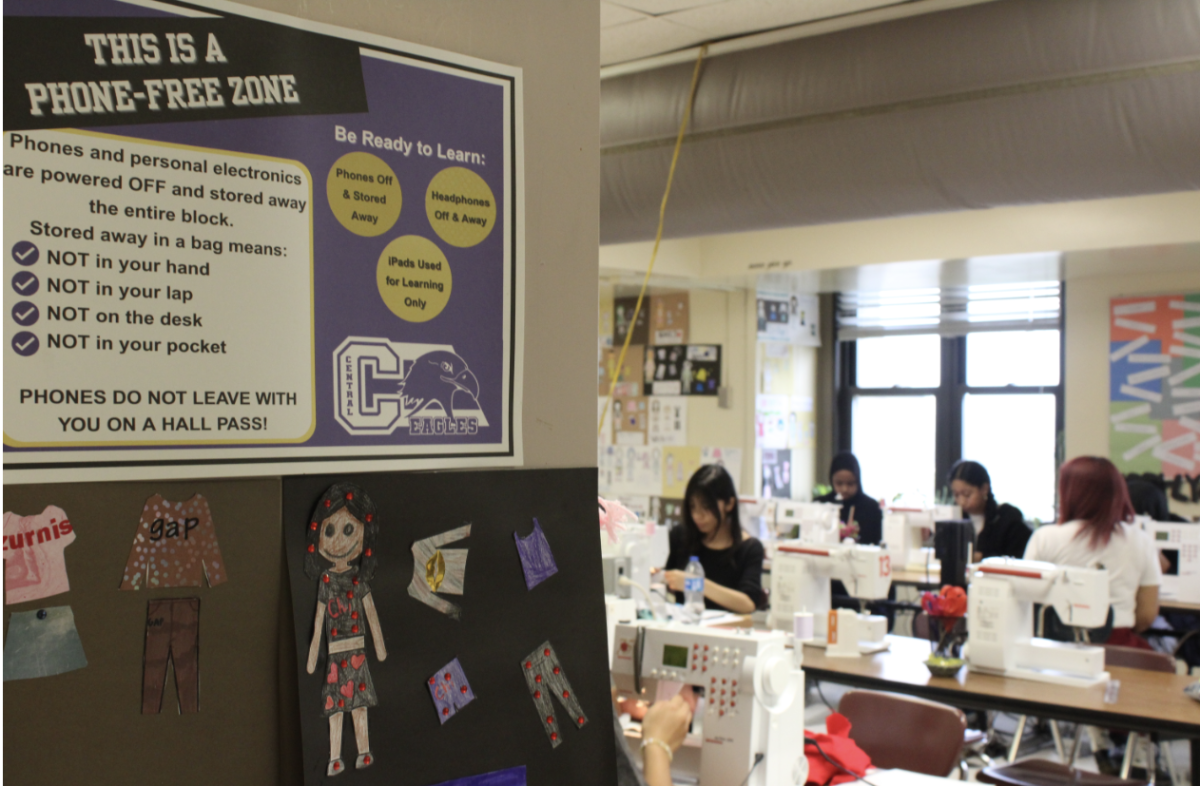

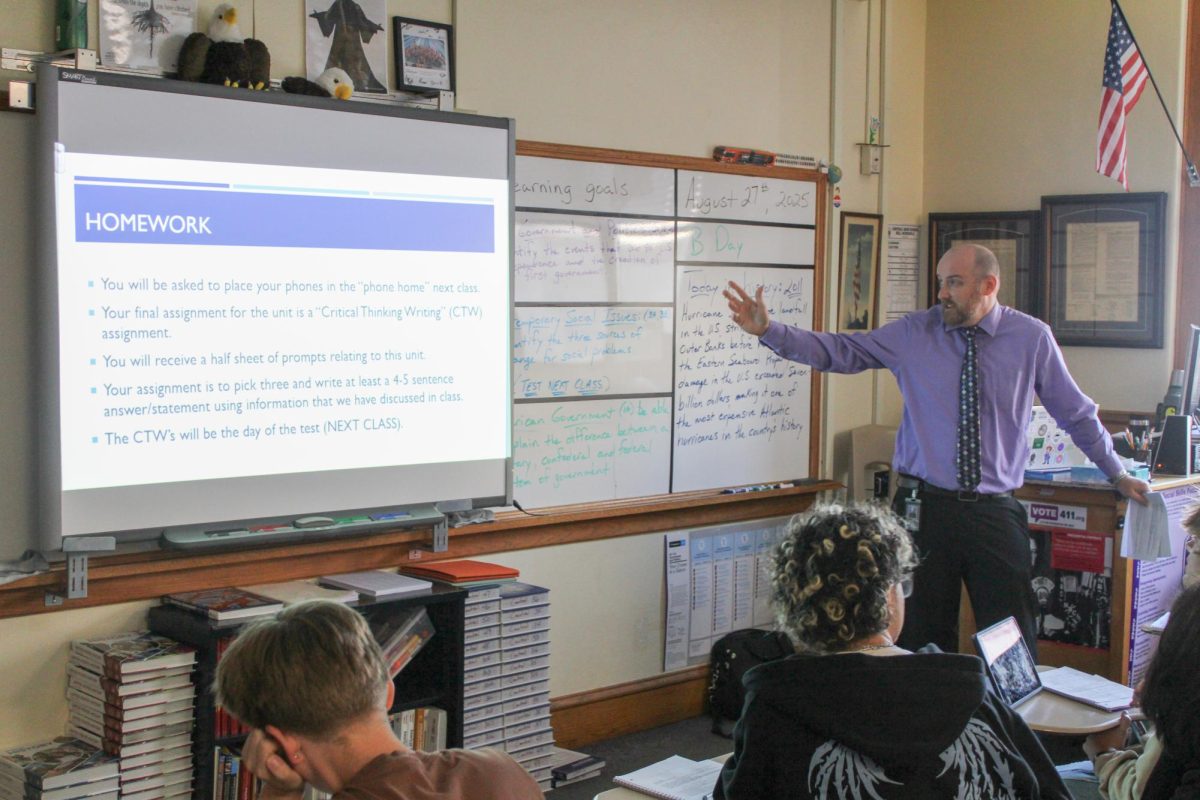

Overall, I believe that educators would benefit from scrutinizing AI’s impact on both learning and teaching and then imposing regulations on its use.

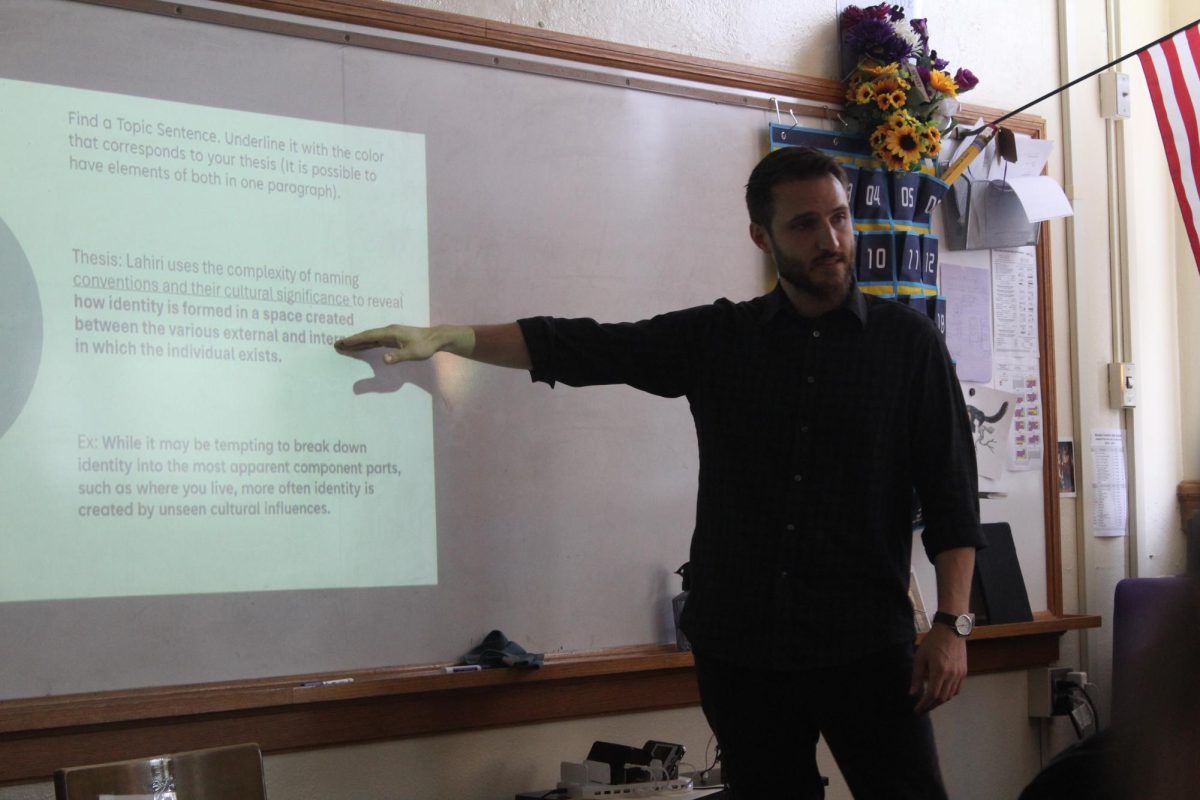

At Central, I find that many teachers go so far as to encourage the use of AI. AI can be a fantastic tool for students, depending on what it’s being used for, but I don’t think it’s right to encourage students to use it to write labs or come up with research questions.

It used to be that a student would pick a topic they were interested in, for example the Roman Empire, and then conduct research into that topic so they could come up with a carefully crafted research question aligning with their interests. With AI, a student simply has to input that topic into a chat and the AI will generate a list of possible research questions. This way, a student does not develop their research or writing skills.